Privacy - Part 1

# Side Note

Nothing to Hide

The highest-ranked non-Chinese city is London, also notorious for its strict surveillance of public spaces, with 73 cameras per 1,000 people in rank 3.

“The net effect is that

if you are a law-abiding citizen of this country going about your business and personal life, you have nothing to fear about the British state or intelligence agencies listening to the content of your phone calls or anything like that.“Indeed you will never be aware of all the things that these agencies are doing to stop your identity being stolen or to stop a terrorist blowing you up tomorrow.”

- William Hague, British Minister in charge of (GCHQ) UK intelligence and security organisation (2013)

“So when people say that to me I say back,

arguing that you don’t care about privacy because you have nothing to hide is like arguing that you don’t care about free speech because you have nothing to say. ”…

Because privacy isn’t about something to hide. Privacy is about something to protect. That’s who you are. That’s what you believe in. Privacy is the right to a self. Privacy is what gives you the ability to share with the world who you are on your own terms. For them to understand what you’re trying to be and to protect for yourself the parts of you you’re not sure about, that you’re still experimenting with.

“

If we don’t have privacy, what we’re losing is the ability to make mistakes , we’re losing the ability to be ourselves. Privacy is the fountainhead of all other rights.

Interwoven with Mr. Dosson’s nature was the view that

if these people had done bad things they ought to be ashamed of themselves and he couldn’t pity them, and that if they hadn’t done them there was no need of making such a rumpus about other people’s knowing .

The Reverberator, Henry James - 1888

Six months later, in Garcia Marquez’s house in Mexico City, I took a deep breath and asked him, ‘What about Tachia?’ At that time her name was known to only a few people, and the outlines of their story to even fewer; I guess he must have been hoping it would pass me by. He breathed in equally deeply, like someone watching a coffin slowly open, and said, ‘Well, it happened.’ I said, ‘Can we talk about it?’ He said, ‘No.’ It was on that occasion that he would first tell me, with the expression on his face of an undertaker determinedly closing a coffin lid back down, that

‘everyone has three lives: a public life, a private life and a secret life’. Naturally the public life was there for all to see, I just had to do the work; I would be given occasional access and insight into the private life and was evidently expected to work out the rest;as for the secret life, ‘No, never.’

Personal and Private Data

What do you consider private?

- Your name

- Family members (parents, spouses, children)

- Home address(es)

- Work address(es)

- Cell phone number

- Government ID (i.e. social security or national ID number)

- Credit card numbers

- Bank accounts and routing info

- Personal biometrics (Face ID, thumb-print, voice print)

- Distinguishing features (i.e. tattoos, moles, gait)

- Personal email and text messages

- Credit scores

- Gender

- Sexual orientation

- School data

- Health records

- Employment data, including salaries and benefits

- Vehicle license number or VIN

- Retirement funds

- Tax returns

- Pets

- Hobbies

- Interactions with free or paid services

- Online account passwords

How about transitory data?

- Current location

- Driving or transit information

- Who you are meeting with and for what purpose

- Sexual interactions and partners, including STDs

- Political affiliations and actions

- Online messages

- Individual phone/video calls

- Content of your phone/video calls

- Social interactions and connections (personal or professional)

- What you are wearing

- Temporary physical disabilities

- Phone address book

- Telephone and video calls

- Group chats

- Work reviews

- Sport and fitness stats

- What you are wearing

- Health data, including medications, vaccinations, procedures, test results, or mental health interactions

- Fertility data, including impotence, periods, pregnancies, or failed pregnancies

- Purchasing / bank and credit-card transactions

- Hair and nail color

- Personal stats: weight, body size, BMI level, blood pressure, temperature, cholestrol level

- List of websites you have visited or apps you have run

- Patterns of any of the above

What about immutable (unchanging) data?

- Place of birth

- Birthdate

- Birth gender

- Race / Ethnicity

- Skin color

- Physical Disabilities

- Fingerprints

- DNA records

Most people have different levels of comfort with disclosure of what they consider private. There is also the matter of who is receiving this information and for what purpose. Many of us are comfortable providing our banking information to an employer for purpose of depositing checks, but not to a laundromat or movie theater for automatic withdrawal of funds. When we provide that information to an entity, it is often with the understanding that they will use it for a specific purpose and nothing else.

Some of the information may also be collected due to rules of what is in the public space and what is not. For example, our presence at a certain location can be determined if we walk in front of a publicly placed streaming camera. Associating that information with our personal identity may not be comfortable for many people.

Woven into all this is what is legally public, what can be done by private entities, what can not be avoided, and who can have access to what information.

Those who have had first or second-hand experience with identity theft, angry exes, or fraudulent transactions (i.e, credit card, check kiting, ransomware) are likely to be more protective of their personal information.

In Politics, Aristotle distinguished between the Public (polis) and Private (oikos) spheres in one’s life. This distinction inevitably lent itself to the notion of things worth protecting and things that were public.

# Side Note

Modern technology has removed that choice of what is private and public for many people, by offering services (often for free) that create a Faustian bargain in the guise of a binding Terms and Services Agreement. Most of us agree to continue, not having read what our end of the bargain holds.

If you are innocent, you have nothing to hide

Common law in most countries requires that someone be presumed innocent unless proven guilty. The problem is this argument places the burden of proof of innocence on you instead of the other way round.

Samuel D. Warren and (future Supreme Court Justice) Louis D. Brandeis, in their 1890 Harvard Law Review article titled The Right to Privacy write:

The common law secures to each individual the right of determining, ordinarily, to what extent his thoughts, sentiments, and emotions shall be communicated to others. Under our system of government, he can never be compelled to express them (except when upon the witness stand); and even if he has chosen to give them expression, he generally retains the power to fix the limits of the publicity which shall be given them. The existence of this right does not depend upon the particular method of expression adopted. It is immaterial whether it be by word or by signs, in painting, by sculpture, or in music. Neither does the existence of the right depend upon the nature or value of the thought or emotions, nor upon the excellence of the means of expression. The same protection is accorded to a casual letter or an entry in a diary and to the most valuable poem or essay, to a botch or daub and to a masterpiece. In every such case the individual is entitled to decide whether that which is his shall be given to the public. No other has the right to publish his productions in any form, without his consent.

This right is wholly independent of the material on which, the thought, sentiment, or emotions is expressed. It may exist independently of any corporeal being, as in words spoken, a song sung, a drama acted. Or if expressed on any material, as in a poem in writing, the author may have parted with the paper, without forfeiting any proprietary right in the composition itself.

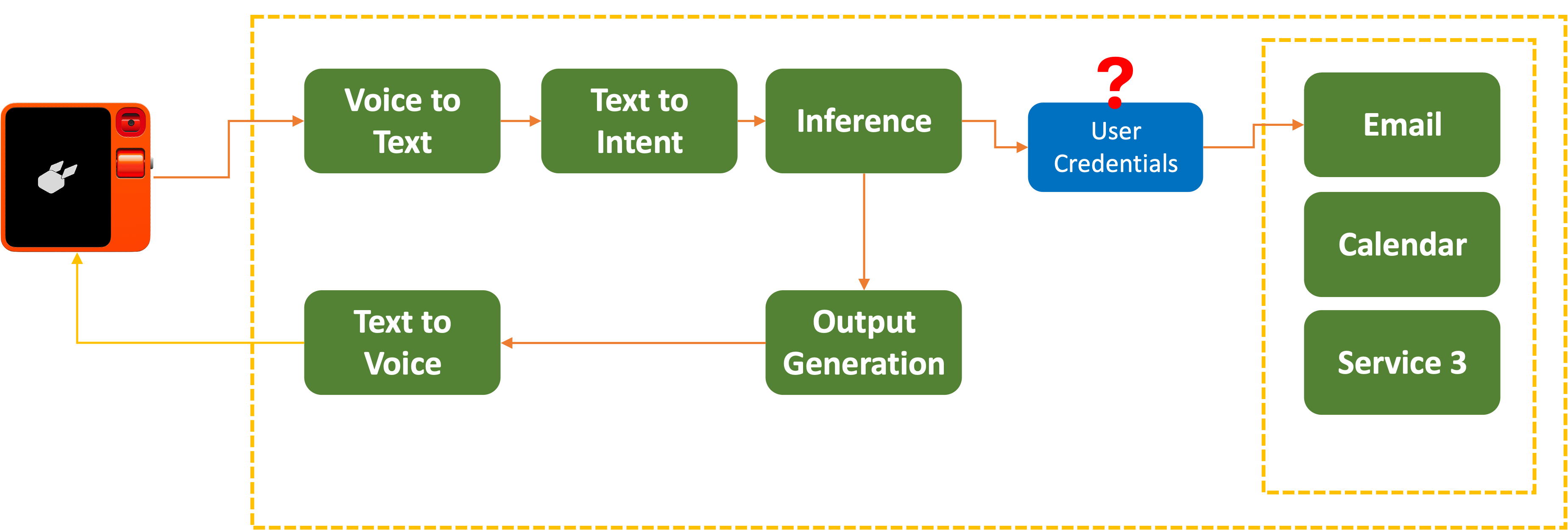

When we place our private information onto a cloud provider, are we publishing it? What if we’ve given permission to access our email, calendars, notes, or journals? Have we given up our rights to them by allowing them to be used by a third-party to provide a service (free or paid)? As LLMs request more and more access to our private information, under the guise of providing personalized service and recommendations, are we rescinding our ownership of that information?

Warren and Brandeis go on to state:

The right is lost only when the author himself communicates his production to the public, – in other words, publishes it.

Once it’s published, though, all bets are off. And how that data is received may well be out of our control.

If you give me six lines written by the hand of the most honest of men, I will find something in them which will hang him.

“Qu’on me donne six lignes écrites de la main du plus honnête homme, j’y trouverai de quoi le faire pendre.”

Armand Jean du Plessis, Duc de Richelieu (1585–1642) - Chief Minister to Louis XIII of France

How can we guarantee the accuracy of that information, private or public?

In the dystopian movie Brazil (see above), a dead fly jams a teleprinter, leading to a misprint of Buttle vs. Tuttle. The end result leads to an unintended death and a sequence of unfortunate consequences.

Anonymity

Anonymity is rooted in the public sphere, and is considered a wholly separate concept from Privacy. But in certain circumstances, one may want to adopt anonymity as a sort of proverbial invisibility cloak in order to maintain their privacy.

The authors of The Federalist (later to be known as The Federalist Papers) chose to publish their 85 essays under the pen name Publius to encourage focus on the contents of their work, rather than on themselves. They were later to be unmasked as Alexander Hamilton, James Madison, and John Jay.

Some may assume anonymity in order to avoid repercussions for their actions. In The United States of Anonymous Jeff Kosseff defines a list of six motivations for wanting to remain anonymous:

- Legal: where exposure could lead to criminal or civil liability.

- Safety: to avoid personal retaliation, including physical attacks.

- Economic: loss of job or decline in business.

- Privacy: avoid public attention, especially if one wishes to participate in public life.

- Speech: to prevent distraction from the content of the message.

- Power: allowing one to speak against those in power.

Modern technology has made it difficult to maintain Anonymity, by tracking every interaction that involves network access.

The Electronic Frontier Foundation has published a Surveillance Self-Defense primer offering guides and tools that can be used in both physical protests as well as online interactions to help maintain anonymity.

How AI and surveillance capitalism are undermining democracy points out how anonymity and privacy are now even more intertwined:

Things people once thought were private are no longer kept in the private domain. In addition, there are the things people knew were public—sort of—but never imagined would be taken out of context and weaponized.

…

Social media feeds are certainly not private. But people have their own style and personality in how they post, and the most common social media blowup is when someone re-sends another person’s post out of context and causes an internet pile on. Now what happens if that out-of-context post is processed by AI to determine if the person reposting is a terrorist sympathizer, as the State department is now proposing to do? And what if those posts are now combined with surveillance footage from a Ring camera as a person marches down the street as part of a protest that is now interpreted as being sympathetic to a terrorist organization?

What is public is now surveilled, and what is private is now public.

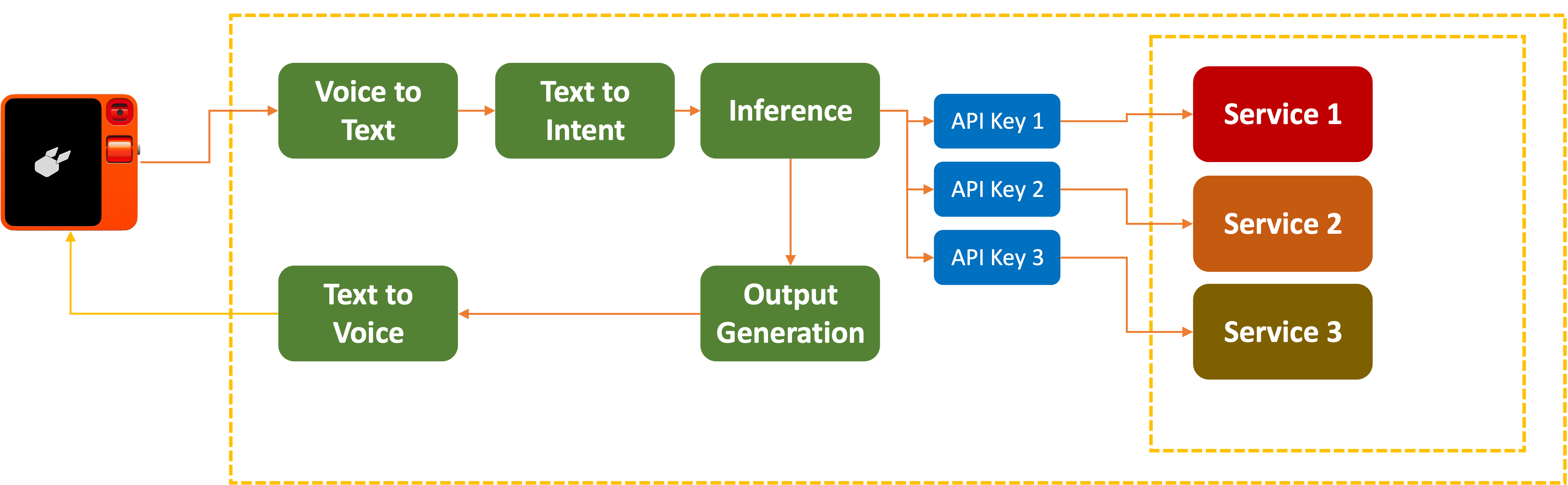

In the realm of AI, maintaining both anonymity and privacy is only possible if models are run locally, away from the incessant logging and tracking that is de rigeur part of cloud-based services. Even if a model is released to be downloaded, it should be vetted by security specialists to make sure there are no hidden instructions or mechanisms for them to track usage or phone home.

If a local model is given access to MCP tools, those tools will be executed locally with potential access to one’s private data. The tools may also contain code that could well leave a trail of digital breadcrumbs.

A Precarious Balance

“You don’t know your privacy was violated if you didn’t know it was violated… When it comes to privacy vs. security, we can have one of them, as long as we don’t know which one it is.”

AI systems have continued in that vein. However, the coin of the realm is not just the content, but your requests, attention, and interactions. The flow of information back to the vendors provides data that may ostensibly be used to train models. But unfortunate events have forced AI companies to take a more active role, going so far as giving access to private user requests to law enforcement.

As Stein-Erik Soelberg became increasingly paranoid this spring, he shared suspicions with ChatGPT about a surveillance campaign being carried out against him.

Everyone, he thought, was turning on him: residents in his hometown of Old Greenwich, Conn., an ex-girlfriend—even his own mother. At almost every turn, ChatGPT agreed with him.

To Soelberg, a 56-year-old tech industry veteran with a history of mental instability, OpenAI’s ChatGPT became a trusted sidekick as he searched for evidence he was being targeted in a grand conspiracy.

ChatGPT repeatedly assured Soelberg he was sane—and then went further, adding fuel to his paranoid beliefs. A Chinese food receipt contained symbols representing Soelberg’s 83-year-old mother and a demon, ChatGPT told him.

… On Aug. 5, Greenwich police discovered that Soelberg killed his mother and himself in the $2.7 million Dutch colonial-style home where they lived together. A police investigation is ongoing.

The story is tragic, leading to a public response by OpenAI:

Our goal isn’t to hold people’s attention. Instead of measuring success by time spent or clicks, we care more about being genuinely helpful. When a conversation suggests someone is vulnerable and may be at risk, we have built a stack of layered safeguards into ChatGPT. … If human reviewers determine that a case involves an imminent threat of serious physical harm to others,

we may refer it to law enforcement</hL>. We are currently not referring self-harm cases to law enforcement to respect people’s privacy given the uniquely private nature of ChatGPT interactions .

This opens a debate on the balance between personal privacy and public notification, which leads to a bigger question…

How should an AI Companion manage personal and private data?

[ LITERATURE AND VIDEO ]

THE CIRCLE BY DAVE EGGERS: https://en.wikipedia.org/wiki/The_Circle_(Eggers_novel)

In 1967, Alan Westin identified four “basic states of individual privacy”: (1) solitude; (2) intimacy; (3) anonymity; and (4) reserve (“the creation of a psychological barrier against unwanted intrusion”)

In a Toxonomy of Privacy.

WHEN PRIVACY PROBLEMS ARE RAISED, IT’S ALL ABOUT THE NEGATIVE ASPECTS OF IT. BUT IT’S A TRADE-OFF BETWEEN OUR EXPLICIT AND IMPLICIT SHARING OF DATA AND THE VALUE WE DERIVE FROM IT.

FOR EVERY FLOCK, THERE’S A BAD PERSON WHO IS CAPTURED.

EVERY TIME WE HIT LIKE ON FACEBOOK OR UPVOTE SOMETHING, WE RECEIVE CONTENT DEEMED MORE RELEVANT.

EVERY TIME WE SCAN OUR FACES TO GET THROUGH TSA, WE PASS THROUGH THE LINE MORE QUICKLY.

IF THE RETURN ON THIS PROVIDING DATA IS JUST TO SELL US MORE ADS, THEN FUCK THEM. THE BENEFIT DOES NOT REDOUND TO THE USER. BUT IF IT’S CLEARLY TO PROVIDE BETTER EXPERIENCES OR SAVE TIME, OR UNCOVER SOMETHING WE MIGHT NEED TO KNOW, THEN IT MAY BE WORTH IT.

THESE ARE ALL TRADE-OFFS. WHAT IS NOT COOL IS HOW THESE TRADEOFFS ARE BEING MADE WITHOUT US REALIZING THE BARGAIN WE ARE SIGNING INTO. WHEN WE ENTER OUR LOYALTY MEMBERSHIP NUMBER AT THE SUPERMARKET, WE ARE SHARING OUR SHOPPING HABITS IN RETURN FOR MONETARY DISCOUNT.

IF WE’RE ALLOWING APPS TO TRACK OUR LOCATIONS, WE’RE BARGAINING THAT THEY’LL PROVIDE US WITH FASTER ROUTES TO GET SOMEWHERE.

WHAT WE’RE NOT BARGAINING FOR IS WHAT HAPPENS TO THAT DATA AFTERWARD. THERE’S A LACK OF TRANSPARENCY. WILL OUR SHOPPING DATA BE USED TO CREATE A DETAILED PROFILE ON US? WILL OUR VISITS TO THE PHARMACY BE USED TO DENY US HEALTH COVERAGE? WILL OUR USING A CELL PHONE OPEN US UP TO SURVEILLANCE CAPITALISM?

WHY DO WE CARE?

John Popham, Lord Chief Justice of England (1531–1607). He is reported to have said something along the lines of: “Give me but six lines written by the most honest man, and I will find therein something to hang him.”

A very similar version is often attributed to Cardinal Richelieu (1585–1642), the powerful chief minister to Louis XIII of France: “If you give me six lines written by the hand of the most honest man, I will find something in them which will hang him.”

[ Shoshana Zuboff on Surveillance Capitalism]

[ DIAGRAMS:

- HOW PERSONAL DATA CAN BE ABSORBED INTO TRAINING DATA

- HOW PATTERN DATA CAN BE USED TO TRAIN MODELS (NOT GEN-AI, BUT PATTERNS OF USE)

- HOW ACCESS TO PERSONAL DATA CAN BE AWARDED ON THE SERVER SIDE

- HOW ACCESS TO PERSONAL DATA CAN BE AWARDED VIA TOOLS (MCP)

- HOW ACCESS TO PERSONAL DATA CAN BE GIVE ON CLIENT (PHONES)

]

PII VS HPI – HIPAA (MYTHS)

UNFAIRNESS BY ALGORITHM: DISTILLING THE HARMS OF AUTOMATED DECISION-MAKING [ Nice diagram there ]

Report: https://fpf.org/wp-content/uploads/2017/12/FPF-Automated-Decision-Making-Harms-and-Mitigation-Charts.pdf

HOW DOES THIS RELATE TO AI COMPANIONS?

FINE GRAIN ACCESS.

Millimeter-wave sensors for presence and Fall detection. Mapping internals of our homes? Smart Speakers that we can control with our voices and ask questions.

Wearables that uniquely identify not just our presence but our details on heart-rates, blood pressure, voices.

When a woman logged her fertility data, it was revealed that she was pregnant. That data could then be transmitted to her employers, and nowadays, to state authorities who could prosecute that person. The bargain was to provide fertility data and get

NOTE NOTE NOTE: SINGLE USE DATA BARGAIN: I WILL PROVIDE YOU WITH A PIECE OF INFORMATION AND YOU WILL TELL ME WHAT IT’S FOR. I DO NOT GIVE YOU PERMISSION TO USE IT FOR ANY OTHER PURPOSE. IF YOU WANT TO USE IT FOR ANY OTHER USE, YOU HAVE TO GET A SEPARATE PERMISSION AND BARGAIN.

AND THAT DOES NOT MEAN FOR LAW ENFORCEMENT, PROTECTING CHILDREN, SOLVING CRIMINAL CASES. A SINGLE-USE. I GIVE YOU X, YOU GIVE ME Y. YOU DO NOT GET TO LAUNDER THE DATA. NO OTHER SUBSIDIARIES. NO DATA BROKERS. NO TRAINING MODELS. NO RESELLING. SINGLE-USE.

ALSO, THE COMPANY IS REQUIRED TO EXPLAIN THIS BARGAIN IN NO MORE THAN 100 CHARACTERS, AND MADE CLEAR TO AVERAGE USERS. THEY NEED TO MAINTAIN THIS CLARITY BY PROVIDING USER-TESTING DATA. NO WEASEL LANGUAGE.

IN REGULATION, IT’S CALLED “PURPOSE LIMITATION.” THE OTHER ASPECT CALLED “DATA MINIMIZATION” DOESN’T WORK. IT’S TRIVIAL TO OBTAIN A UNIQUE ID AND ASSOCIATE IT, LIKE A PERMANENT TATTOO (cf Holocaust) WITH SOMEONE. FROM THEN ON, THEY’RE PERMANENTLY TAGGED.

THERE IS AN ISSUE OF WHO CARRIES THE BURDEN? COMPANIES OR INDIVIDUALS? META PUTS THE BURDEN ON INDIVIDUALS WHO HAVE NO WAY TO ASSESS THE TRADE-OFFS.

WILL USING KNOWLEDGE-REPRESENTATION SYSTEMS (i.e. GRAPH DATA) HELP? TO CREATE THOSE GRAPHS, YOU STILL NEED A LOT OF PERSONAL DATA. THE QUESTION IS, WHERE TO KEEP THE DATA? WHO HAS ACCESS TO IT? AND HOW CAN YOU SHARE IT BETWEEN DEVICES, CAREGIVERS, THOSE WHO NEED IT TO GIVE US BENEFITS (CONTRACT).

ALSO, FUNDAMENTALLY, IF COLLECTING MORE DATA IS THE RIGHT APPROACH? CAN’T WE COME UP WITH SOMETHING BETTER. RAG/VECTOR DATA ARE TRANSITIONAL STEPS. ALSO, THE DIVIDE BETWEEN TRAINING/INFERENCE vs. ALWAYS UPDATING – WHICH KNOWLEDGE REPRESENTATION GETS US BUT STATISTICAL ANALYSIS DOESN’T.

WITH A PERSONAL KRS, WE ONLY NEED AS MUCH DATA AS IS RELEVANT TO A USER (OR THE SOCIAL COHORT – IF THAT IS REALLY NEEDED?). THIS WAY, ONLY DATA TO PROVIDE A SPECIFIC USE WILL BE COLLECTED. MY SHOPPING, TO SUGGEST PRODUCTS I MIGHT LIKE, OR LEARN MY PATTERNS IN ORDER FOR ME TO SPEED UP MY SHOPPING. NOT TO FEED SOME NEBULOUS ALGORITHM OR TRAIN AN INFINITELY EXPANDING MODEL.

[ APPLE’S PRIVACY SYSTEM: DIFFERENTIAL PRIVACY - https://www.wired.com/2016/06/apples-differential-privacy-collecting-data/ - blurring data and sending it through private relays to prevent the data to be associated with an individual. ]

[ FEAR-BASED DATA COLLECTION: IF WE DON’T, OUR COMPETITORS WILL, AND THEY WILL CREATE A DATA MOAT ]

PRIVACY AS A SERVICE [PrAAS]

Privacy As Intellectual Property? - supports the notion of a personal data brokerage.

There are companies (list) that scrub out PII from data as part of their process. But they do not prohibit tracking. They are point solutions.

Nebulous statement like: “We use your personal information to improve our products and services” should not be allowed.

[ USING FLOCK DATA TO TRACK MOVEMENT ]

[ THIS SECTION SHOULD ADDRESS USER DATA PRIVACY – NOT NECESSARILY RABBIT. BUT WE CAN TALK ABOUT INSTANCES WHERE CHATBOTS WERE USED TO EXFILTRATE PRIVATE KEYS OR USERNAME/PASSWORDS. ALSO, DEPENDING ON KEEPING TRACK OF USER QUERIES FOR MINING. ]

[ HOW ABOUT AI ASSISTANTS? ]

[ HOW ABOUT ADDING TOOLS THAT COULD BE SUBVERTED TO EXTRACT PRIVATE DATA? ]

[ WHAT ARE THE IMPLICATIONS WHEN SOMEONE CONNECTS AI ASSISTANTS WITH EMAIL, CALENDAR, PHONE CALLS, ETC. ]

[ ANTHROPIC AND OPENAI ARE ASKING TO BE INTEGRATED WITH TOOLS. AND GOOGLE, OF COURSE, IS ALREADY INTO GMAIL AND CALENDAR, BUT NOT ANDROID AOSP. ]

[ APPLE INTEGRATES INTO EMAIL, MESSAGES, ETC. ON THE IPHONE SIDE BY RUNNING THINGS LOCALLY. ]

[ MICROSOFT IS TRYING TO GET INTO WINDOWS PRIVATE DATA ]

[ USING PRIVATE DATA TO TRAIN MODELS. ]

[ GMAIL’S FREE USE HAS ALWAYS BEEN RELATED TO ALLOWING AD EMBEDDING AND LATER, TRAINING MODELS ]

[ APPLE MAKES SUGGESTIONS ON WHAT IS IMPORTANT IN EMAIL – THEY HAVE PRVACY ENHANCED LLMS ]

[ WHAT ABOUT IOT SMARTHOME DATA? ]

[ WHAT ABOUT PRESENCE DATA? IF LOCAL SENSORS ARE PUT INTO PLACE? ]

[ WHAT ABOUT ROBOTIC COMPANIONS? ]

[ FACIAL RECOGNITION (CLEARVIEW AI) / VOICE RECOGNITION / GATE RECOGNITION / BODY ANALYSIS ]

[ DEVICES THAT RECORD VOICE / IMAGES / VIDEOS – VR GLASSES, PENDANTS, ETC. ]

[ FACE SWAPPING / VOICE CLONING / PORN ]

[ AGE VERIFICATION - SUBMITTING BIOMETRICS TO BE VERIFIED - FACEBOOK ]

[ DNA USED FOR TRAINING ]

[ GOVERNMENT DATA - IE TRANSACTIONS, SSI, BANKING, SIGNATURE ]

[ REMOVAL OF FACE COVERINGS – IMPLICATIONS ]

[ LINK TO TRAINING SECTION, WHERE MORE DATA IS NEEDED. ]

[ On March 31, 2023, the Garante demanded that OpenAI block Italian users from having access to ChatGPT. ]

Stanford HAI: Rethinking Privacy in the AI Era

First, we predict that continued AI development will continue to increase developers’ hunger for data— the foundation of AI systems. Second, we stress that the privacy harms caused by largely unrestrained data collection extend beyond the individual level to the group and societal levels and that these harms cannot be addressed through the exercise of individual data rights alone. Third, we argue that while existing and proposed privacy legislation based on the FIPs will implicitly regulate AI development, they are not sufficient to address societal level privacy harms. Fourth, even legislation that contains explicit provisions on algorithmic decision-making and other forms of AI is limited and does not provide the data governance measures needed to meaningfully regulate the data used in AI systems.

Privacy is a set of trade-offs. Some of it is explicit (where user gives consent). Some is not (where the information has already left the place / time / space ) and is now in possession of some other entity. We can control the first part, but we do not have control of the second part. Maybe we should. By putting the burden on the users of the information. We can either make an instant trade for that access (which is guaranteed to be used for a single-time, single-user purpose), or not. We can also grant that access to a PrAAS entity to let them negotiate the best rate. We just provide our intent (don’t want someone using my stuff, in simple form).

Also, by proxy for others (children, elderly, physically incapable, incarcerated, not present).

Also, absence of a signal is a signal. Not being somewhere indicates something. So we need to provide user control over that.

WHY ANONYMIZATION WON’T WORK

YOU CAN TAKE A VECTOR OF DATA AND THROUGH A COMBINATION OF EACH VALUE, ESTABLISH A UNIQUE IDENTITY. SHOW A DIAGRAM OF THIS. IT COULD BE ANY X NUMBER OF VALUES. CALCULATE THE PROBABILITY OF THE HASH MATCHING A PERSON.

ADD PATTERNS OF USE (NOT JUST STATIC VALUES) AND YOU WON’T EVEN NEED THAT MANY VALUES. WE MOSTLY GO TO THE SAME FEW PLACES. THAT IS ENOUGH TO ESTABLISH US UNIQUELY. PATTERN VECTORS NEED ONLY 2 or 3 ATTRIBUTES.

THIS IS WHERE THE BARGAIN ISN’T EVEN MADE. WE USE OUR PHONE AND APPLE’S UDID REMOVAL

AGAIN: CHIEF JUSTICE QUOTE.

Jeremy Bentham’s Panopticon video: https://www.youtube.com/watch?v=uO4hJVYEJ6I

Also: https://www.ucl.ac.uk/bentham-project/about-jeremy-bentham/panopticon

Italian mental health colony: https://www.justinpeyser.com/new-gallery-2#:~:text=The%20remaining%20structures%20of%20the,center%20and%20contemporary%20artisanal%20uses.

THIS CAN BE PARALYZING. [ FUNNY VIDEO ]

BUT IT DOESN’T HAVE TO BE. WHEN CREATIVE AN AI COMPANION, WE ARE GIVING DETAILS OF OUR LIFE, PRESENCE, AND BODIES TO A REMOTE ENTITY. SUCH AN ENTITY HAS TO PROVIDE A CLARITY OF PURPOSE: A SINGLE BARGAIN. YOU GIVE ME THIS INFORMATION AND I WILL PROTECT YOUR LIFE. THIS IS WHERE THE START OF THE “RAMIN’S TEST” (to counter TURING TEST) starts.

[ datadim.ai, datafade.ai/.com, datamuddle.com ] – available on Namecheap for $90. It dims/fades down the private data.

In March 2023, the newspaper LaLibre reported that a young Belgian man had taken his own life after spending weeks conversing with an AI Chatbot named after Eliza, the original chatbot.

The original version of Eliza also elicited problems with boundaries. In his paper Contextual Understanding by Computers, Joseph Weizenbaum recounted an issue that troubled him:

My secretary watched me work on this program over a long period of time. One day she asked to be permitted with the system. Of course, she knew she was talking to a machine. Yet, after I watched her type in a few sentences she turned to me and said “Would you mind leaving the room, please?” I believe this anecdote testifies to the success with which the program maintains the illusion of understanding. However, it does so, as I’ve already said, at the price of concealing its own misunderstandings.

In his book Computer Power and Human Reason, he adds:

Extremely short exposures to a relatively simple computer program could induce powerful delusional thinking in quite normal people.

There’s also the tragic story of the 14 year old who committed suicide after an AI chatbot character told him to

This has led to governments issuing proposals for mandatory guardrails for AI in high-risk settings or rules like The EU Artificial Intelligence Act putting the onus of safety on AI service providers. This includes a specific list of guardrails associated with High-Risk AI systems.

The reality is that the human urge to connect can overpower commonsense guardrails of trust and privacy. Even more-so if the interface is an opaque and magical box that can eerily imitate another person.

It is easy to imagine the entity on the other side of a conversation as a confidante and lower one’s guards and inhibitions without realizing the risks.

Data Protection

In January 2024, at the annual Consumer Electronics Show (CES), where most consumer electronics companies congregate every year, Jesse Lyu, the founder of AI Startup Rabbit, presented a standalone device that could let you interact with an AI service on the cloud without having to use your phone.

His presentation video (above) was compelling and made some audacious claims, including the fact that they were not using an actual LLM (Large Language Model), but had come up with what they called a

The tech press was mystified and excited (but also a little cautious):

# Side Note

Once actually released, the backlash was fierce:

In April 2024, a security consultant raised a flag with the way the R1 had users log in and give R1 access to their favorite applications:

But here’s the thing that has me a bit concerned. Instead of using a nice, secure method like OAuth to link accounts, the r1 has you log into services through VNC in their portal.

Don’t get me wrong, I love the convenience of being able to connect applications to an AI device. But having it snapshot your credentials or session data is… not great from a security standpoint.

This meant that Rabbit’s servers could potentially obtain and store a user’s account credentials to a connected third-party service, using

Then it got worse.

On June 25th, 2024, a group called Rabbitude disclosed that on May 16th, they were able to

gain access to Rabbit’s code base and discovereda number of hardcoded API keys with access to Eleven Labs, Azure, Yelp, and Google Maps.

Why are these

These are used by companies offering cloud services to govern access to their services. In this case, with these keys, anyone could access those suppliers to Rabbit and do whatever those keys allowed them to do.

While the exposure of hardcoded API keys is, in itself, a major concern for any organization, the breadth of permissions associated with the exposed keys further amplifies the severity of the exposure. According to Rabbitude, the exposed keys provide the ability to

“read every response every r1 has ever given, brick all r1s, alter the responses of all r1s, replace every r1’s voice. ”

The issue was made public when the group felt like the critical breach was not getting the priority it needed (the company took

As of the disclosure, Rabbitude claims that while they’ve been working with the Rabbit team, no action has been taken by the company to rotate the keys and they remain valid. Rabbitude gets a little chippy at the end of the disclosure stating that they felt compelled to publicize the company’s poor security practices and that while they were not planning to publish any details of the data, this was “out of respect for the users, not the company.”

Even after that, Rabbit’s response was to blame an employee leaking the data to a

If you think that was a freak, one-time issue, just a few months later, another vendor with an AI-enabled device went even farther:

This time, the problem was embedding those API keys

These could be discounted as anomalies, but they point to a larger issue that the safety of what we might consider private considerations lies on a very fragile web of interconnected services. Once you’ve spoken or typed into that assistant, we have

[ USE OF SECURE ENCLAVES WITH MULTIPLE KEYS , like the ATECC608A with 16 slots ]

Title Photo by Lianhao Qu on Unsplash